Authenticating with Google Cloud Platform from R

- Author: Darren Wong

Introduction

We’ve seen in a previous post how to authenticate with Google Cloud Platform (GCP) using service account credentials. There are a few other ways to authenticate with the platform from R:

- Through an interactive authentication process each time (calling `bigrquery::bq_auth()`/`googleCloudStorageR::gcs_auth()`/similar);

- Caching the authentication token you get from your first interactive authentication process and re-using it each time you authenticate; or,

- Through Google’s Application Default Credentials.

We’ll focus on the last two here since they are most useful for running non-interactive/automated workflows if you’re unable to get a service account.

I’ll be using the same OS and package versions as in Creating external tables in Google BigQuery using R.

Using cached credentials with gargle

This method makes use of the gargle R package to fetch an authentication token with the correct OAuth 2.0 scopes. This requires you to run the interactive process at least once, and you may need to run it again when your token expires.

- First, load `gargle`, then run `gargle::token_fetch()` with the scopes you’d like access to. Since I’ll mainly be working with BigQuery and Cloud Storage, I’ll pass in the scopes relevant to those services. See the full list of GCP OAuth 2.0 scopes here.

  - In the back-end, `gargle::token_fetch()` will run a list of credential functions in sequence (checking for existing OAuth 2.0 tokens, service/external account credentials, Application Default Credentials etc.) to first check if you have any existing authentication tokens. If it does not find any, it will run `gargle::credentials_user_oauth2()`, which will kick off the process you’re about to see here. For more information on this, see this page in the documentation.

```r
library(gargle)

scopes <- c(
  "https://www.googleapis.com/auth/bigquery",
  "https://www.googleapis.com/auth/cloud-platform"
)

token <- gargle::token_fetch(scopes = scopes)
```

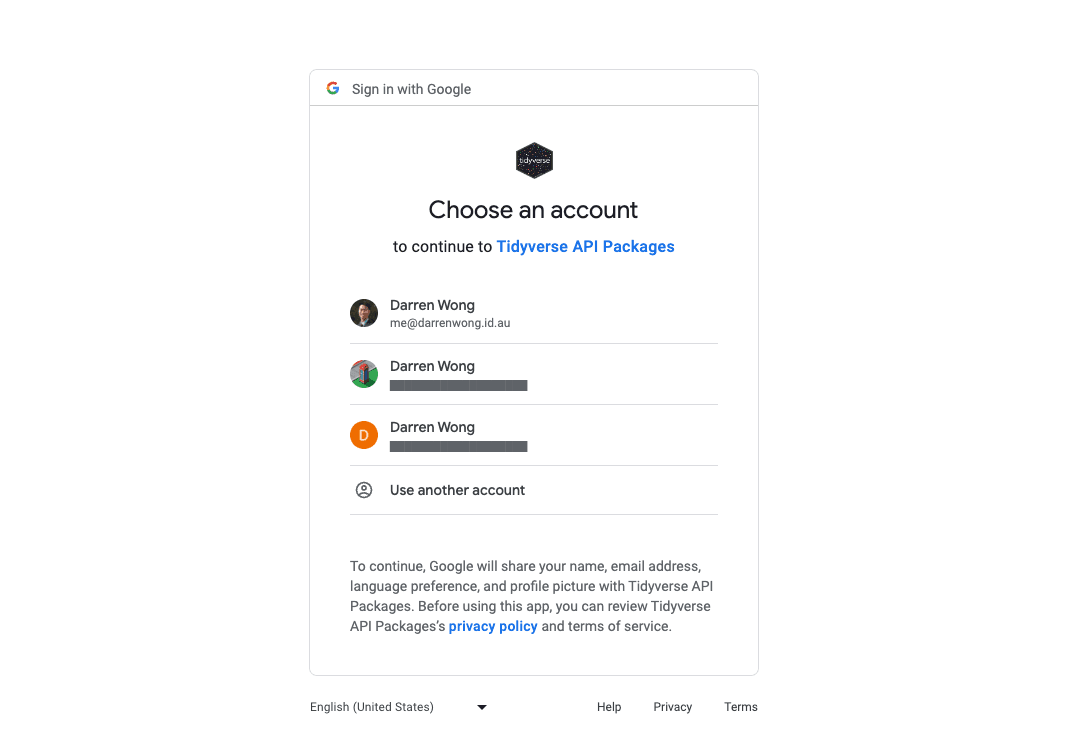

- This will launch a process in your browser where you allow Tidyverse API Packages access to your account. Choose the account you want to use.

- At this stage the page will either return “Authentication complete. Please close this page and return to R.”, or will provide you with an authorization code you can then copy and paste back into your R console. Once this is complete, the interactive process is done.

- You can then start using your `bigrquery`/`googleCloudStorageR` functions as normal, although they may prompt you as to whether you’d like to use an existing token for authentication.

  - To exercise more control over when this happens, we can pass our token explicitly to our authentication functions early in the process, e.g. `bigrquery::bq_auth(token = token)`; `googleCloudStorageR::gcs_auth(token = token)`

  - If this is a project only you are working on, you can also pass an email to the auth functions, which will bypass the manual prompt and always choose to use your cached token, e.g. `gargle::token_fetch(email = '...')`; `bigrquery::bq_auth(email = '...')`

  - Otherwise, if working in an organisation with multiple users, you may need to search for cached tokens with email addresses of certain domain names. More info on this in the last section of this article.

Once you’ve completed these steps, you’ve successfully cached an authentication token and gargle will now look for and load this token whenever you call a function that authenticates with GCP.
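To make the "run a list of credential functions in sequence" behaviour more concrete, here is a minimal base R sketch of the pattern. The three `try_*` functions and `fetch_first_token()` are hypothetical stand-ins written for illustration; they are not gargle functions, and the order shown is not necessarily gargle's. The real list can be inspected with `gargle::cred_funs_list()`.

```r
# Stand-in credential functions (hypothetical): each returns a token or NULL
try_adc <- function(scopes) NULL      # pretend no ADC file exists
try_cached <- function(scopes) NULL   # pretend the token cache is empty
try_interactive <- function(scopes) {
  # pretend the browser flow succeeded
  list(source = "interactive", scopes = scopes)
}

# Walk the functions in order and return the first non-NULL result
fetch_first_token <- function(cred_funs, scopes) {
  for (name in names(cred_funs)) {
    token <- cred_funs[[name]](scopes)
    if (!is.null(token)) {
      message("Credentials obtained via ", name)
      return(token)
    }
  }
  NULL
}

cred_funs <- list(
  adc = try_adc,
  cached = try_cached,
  interactive = try_interactive
)

token <- fetch_first_token(
  cred_funs,
  scopes = "https://www.googleapis.com/auth/bigquery"
)
```

Because the first two stand-ins return `NULL`, the loop falls through to the interactive one, which mirrors why a fresh machine with no cached token ends up in the browser flow.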

Application Default Credentials

Relying over the long term on a cached OAuth 2.0 token that may expire or be invalidated may not be best practice for authenticating with GCP. A better option (bar appropriately scoped service accounts) may be to use Google Application Default Credentials (ADCs).

Google’s authentication libraries commonly use Application Default Credentials (ADCs) as a best practice strategy to automatically find and manage credentials. In short, you save your ADCs to a specific location on your disk, then point all authentication processes from R to that location. Further documentation is available here.

- To generate Application Default Credentials, you will need the gcloud CLI installed (it is part of the Google Cloud SDK, which also ships gsutil). Follow the instructions to install it here. I installed this on macOS Sonoma 14.1.1 on an M1 chip, which involved downloading the available package and running `./google-cloud-sdk/install.sh`

- Once the install is finished, load a fresh terminal session and check that gcloud is available by running `gcloud version`. If gcloud can’t be found, you may need to reinstall it with different options.

- Run the following command in your terminal, which will provide you with a link. Copy this link into a browser, follow the prompts, then copy the provided authorization code back into the terminal and press Enter.

gcloud auth application-default login --no-launch-browser

- If this process completed correctly, you should see something like the following:

Credentials saved to file: [~/.config/gcloud/application_default_credentials.json]

- With your ADCs now correctly generated and saved, we can simply point `gargle` to it.

```r
# `scopes` is the vector defined in the previous section
adc_path <- "~/.config/gcloud/application_default_credentials.json"

credentials <- gargle::credentials_app_default(scopes = scopes, path = adc_path)

bigrquery::bq_auth(token = credentials)
googleCloudStorageR::gcs_auth(token = credentials)
```
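If you’re unsure where your ADCs ended up, the search order described in the gargle documentation can be sketched in a few lines of base R. `find_adc_path()` is a hypothetical helper written for illustration, not part of gargle, and it only covers the macOS/Linux default location.

```r
# Hypothetical helper: resolves the ADC file location on macOS/Linux
find_adc_path <- function() {
  # 1. An explicit path in GOOGLE_APPLICATION_CREDENTIALS wins
  explicit <- Sys.getenv("GOOGLE_APPLICATION_CREDENTIALS", unset = "")
  if (nzchar(explicit)) return(explicit)

  # 2. Otherwise look inside a custom gcloud config directory, if one is set
  sdk_config <- Sys.getenv("CLOUDSDK_CONFIG", unset = "")
  if (nzchar(sdk_config)) {
    return(file.path(sdk_config, "application_default_credentials.json"))
  }

  # 3. Fall back to the default gcloud location
  #    (Windows uses a different default and isn't handled here)
  file.path(
    path.expand("~"), ".config", "gcloud",
    "application_default_credentials.json"
  )
}

adc_file <- find_adc_path()

if (file.exists(adc_file)) {
  message("ADCs found at ", adc_file)
} else {
  message(
    "No ADCs at ", adc_file,
    "; generate them with `gcloud auth application-default login`"
  )
}
```

Checking the file up front gives a clearer message than waiting for an authentication call to fail, which is handy when the code runs unattended.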

Putting it together

I’m creating a library of BigQuery/Cloud Storage-centric functions that will be used by a limited set of users within a small organisation. I want authentication with these platforms to be as simple and hands-off as possible for these users so that they can focus on the work that really matters instead of having to juggle authentication tokens.

With this in mind, I’ve written a function that leverages the power of gargle::token_fetch(), but aims to keep things as automated as possible. This means that the function will:

- First check for ADCs; if valid ADCs are found then it will return this as a token. This is the preferred method.

- Next, it will check for cached tokens. If any are found, it will filter for tokens that have the required scopes and have an email address that matches the corporate email domain name.

- This could easily be modified to take any token with any email address if this is being run elsewhere.

- This is the additional logic that sets this apart from a normal call to `gargle::token_fetch()`. If you don’t need this, it’s likely that `token_fetch()` alone will be sufficient.

- If a token is found that matches these criteria, it will be returned as a token. Otherwise, `gargle::token_fetch()` is called to kick off a new interactive authentication process; this is the only case where manual intervention is required.

I then call this function in each of my BigQuery and Cloud Storage connection functions to authenticate with each platform.
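The cached-token filtering step can be tried out in isolation on a mock cache listing. This is a minimal base R sketch: the data frame below is made up, with `email` and `scopes` columns standing in for the listing of cached tokens, and `pick_cached_email()` is a hypothetical helper, not a gargle function.

```r
# Mock listing of cached tokens (made-up values for illustration)
cached <- data.frame(
  email = c(
    "me@gmail.com",
    "me@organisation.org",
    "colleague@organisation.org"
  ),
  scopes = c(
    "https://www.googleapis.com/auth/bigquery, https://www.googleapis.com/auth/cloud-platform",
    "https://www.googleapis.com/auth/bigquery, https://www.googleapis.com/auth/cloud-platform",
    "https://www.googleapis.com/auth/drive"
  ),
  stringsAsFactors = FALSE
)

# Return the first cached email that has every required scope and belongs to
# the given domain, or NULL if nothing matches
pick_cached_email <- function(cached,
                              required = c("bigquery", "cloud-platform"),
                              domain = "@organisation.org") {
  has_scopes <- vapply(
    cached$scopes,
    function(s) all(vapply(required, grepl, logical(1), x = s, fixed = TRUE)),
    logical(1),
    USE.NAMES = FALSE
  )
  in_domain <- endsWith(cached$email, domain)

  matches <- cached$email[has_scopes & in_domain]
  if (length(matches) > 0) matches[[1]] else NULL
}

chosen <- pick_cached_email(cached)
```

Here the personal address is rejected on domain and the colleague's token is rejected on scopes, leaving the single corporate token that satisfies both conditions. A `NULL` result is the signal to fall back to the interactive flow.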

GCP authentication functions

```r
# Packages the functions below rely on. `log_info()` is assumed to come from
# the `logger` package (it uses glue-style `{}` interpolation).
library(gargle)
library(dplyr)
library(stringr)
library(logger)

#' Authenticate with GCP
#'
#' Uses [gargle::token_fetch()] to generate a token that can be passed to
#' [bigrquery::bq_auth()] and [googleCloudStorageR::gcs_auth()]. Will look at
#' the cache ([gargle::gargle_oauth_sitrep()]) to see if there are any cached
#' tokens and will use the one with the '@organisation.org' email address by
#' default.
#'
#' @param adc_path Path override for location of Application Default Credentials
#' @param force_cache Bool, force the usage of cached tokens and not ADCs
#' @param silent Bool, whether to suppress log messages
#' @param ... Args to pass to [gargle::token_fetch()]
get_token <- function(
  adc_path = NULL,
  force_cache = FALSE,
  silent = FALSE,
  ...
) {
  scopes <- c(
    "https://www.googleapis.com/auth/bigquery",
    "https://www.googleapis.com/auth/cloud-platform"
  )

  # Location gargle searches for ADCs (internal helper, may change between versions)
  default_adc <- gargle:::credentials_app_default_path()

  if (!force_cache && !silent) {
    log_info('Checking {default_adc} for Application Default Credentials')
  }

  # Prefer ADCs unless the caller explicitly asked for cached tokens
  if (file.exists(default_adc) && !force_cache) {
    credentials <- gargle::credentials_app_default(scopes = scopes, path = adc_path)
    if (!is.null(credentials)) return(credentials)
  }

  if (!silent) {
    log_info('No ADCs found, generate in Terminal with `gcloud auth application-default login --no-launch-browser`')
    log_info('Falling back on cached tokens stored in ~/.cache/gargle (if any)')
  }

  # First check if any tokens are cached, without printing the sitrep
  sitrep <- with_gargle_verbosity(
    'silent',
    gargle_oauth_sitrep()
  ) %>%
    as_tibble()

  # Inside filter(), `scopes` and `email` refer to columns of `sitrep`, not to
  # the `scopes` vector defined above
  valid_cached <- sitrep %>%
    filter(
      str_detect(scopes, 'bigquery') &
        str_detect(scopes, 'cloud-platform') &
        str_detect(email, fixed('@organisation.org'))
    ) %>%
    pull(email)

  if (length(valid_cached) > 0) {
    token <- gargle::token_fetch(email = valid_cached[[1]], scopes = scopes, use_oob = TRUE)
  } else {
    # Opens up an interactive auth window - follow the instructions on screen
    # Caches authentication token - unsure when this is invalidated
    token <- gargle::token_fetch(scopes = scopes, use_oob = TRUE, ...)
  }

  # Return
  token
}
```

```r
# BigQuery/Cloud Storage connectors ----

library(DBI)
library(bigrquery)

#' Connect to BigQuery
#'
#' @param json Whether to authenticate interactively (`NULL`), or to use a
#'   service account JSON
#' @param project Project your target BigQuery database sits in
#' @param dataset The BQ dataset you want to connect to
#' @param billing Project billing (usually same as your `project`)
#' @param ... Currently unused
connect_bigquery <- function(
  json = NULL,
  project = 'project_name',
  dataset = 'dataset_name',
  billing = NULL,
  ...
) {
  if (is.null(billing)) billing <- project

  # If JSON path isn't NULL, authenticate with the specified service account JSON
  if (!is.null(json)) {
    bq_auth(path = json)
  } else {
    # Otherwise, use `gargle` to use existing ADCs or generate a token
    token <- get_token(silent = TRUE)
    bq_auth(token = token)
  }

  # Connect to BQ; the default dataset for the session is set here
  bq_con <- dbConnect(
    bigrquery::bigquery(),
    project = project,
    dataset = dataset,
    billing = billing
  )

  bq_con
}
```

```r
library(googleCloudStorageR)

#' Connect to Cloud Storage
#'
#' Opens a connection to GCS, returns only details on the connection
#'
#' @param json Whether to authenticate interactively (`NULL`), or to use a
#'   service account JSON
#' @param project Project your target Cloud Storage bucket sits in
#' @param bucket The bucket you want to connect to
#' @param up_lim Maximum file size (in bytes) for 'simple' uploads
#' @param ... Args to pass to [googleCloudStorageR::gcs_auth()]
connect_cloudstore <- function(
  json = NULL,
  project = 'project_name',
  bucket = 'bucket_name',
  up_lim = 2e+9L,
  ...
) {
  # If JSON path isn't NULL, authenticate with the specified service account JSON
  if (!is.null(json)) {
    gcs_auth(json_file = json, ...)
  } else {
    # Otherwise, use `gargle` to use existing ADCs or generate a token
    token <- get_token(silent = TRUE)
    gcs_auth(token = token, ...)
  }

  # Set global bucket for session
  gcs_global_bucket(bucket)

  # Set max 'simple' upload file size to 2gb (instead of 5mb)
  gcs_upload_set_limit(upload_limit = up_lim)

  list(
    project = project,
    bucket = bucket,
    up_lim = up_lim
  )
}
```